An Ultimate Guide to the Machine Learning Tech Stack

Machine learning is having a significant impact across industries by allowing systems to learn from data and make predictions. Bringing a model to life, from building and training through to deployment, depends on a deliberately chosen set of tools and technologies known as the “Machine Learning Tech Stack.” In this guide, we’ll explore each component, why it matters, and how each stage contributes to smooth and successful ML development.

Overview of the Machine Learning Tech Stack

The machine learning tech stack brings together a powerful set of tools, frameworks, and platforms that support the entire journey of developing, deploying, and managing ML models. From handling data to building models and ensuring smooth deployment and monitoring, each layer plays a valuable role.

Choosing the right tech stack makes a real difference: it streamlines workflows, improves efficiency, and sets the stage for scalable, high-performing ML solutions. As machine learning services grow in complexity and reach, a strong tech stack helps teams work more smoothly and achieve more consistent results.

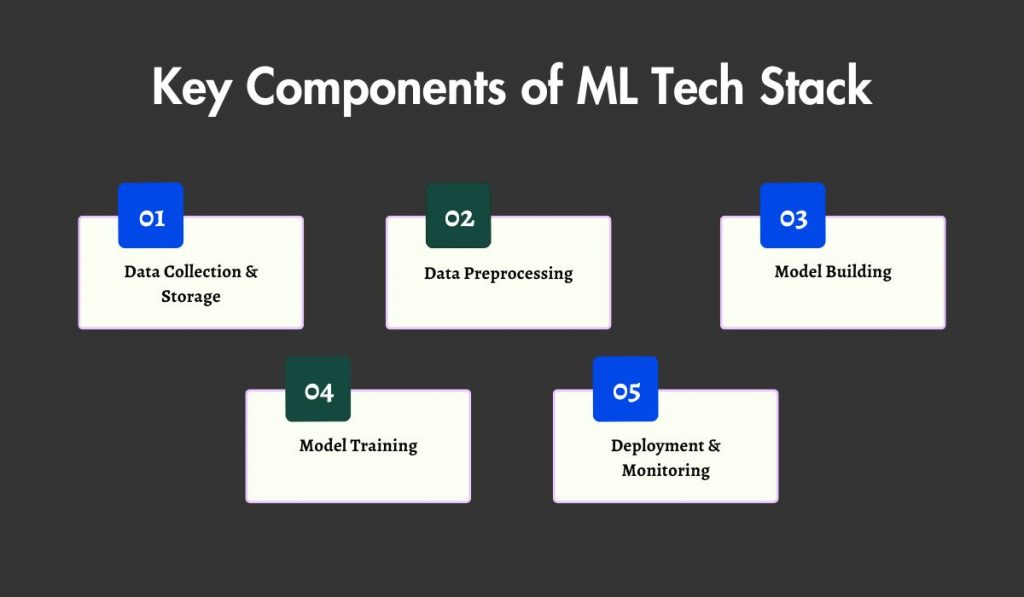

Key Components of the ML Tech Stack

The machine learning tech stack is made up of several essential layers, each serving a distinct purpose in the overall ML workflow. Together, they help streamline development, improve efficiency, and support long-term scalability.

Data Collection & Storage

Every machine learning project begins with data. High-quality data collection and storage systems form the foundation of accurate, dependable models. Object storage services such as Google Cloud Storage and Amazon S3, along with distributed file systems such as Hadoop’s HDFS, let organizations store and retrieve large datasets. These systems scale capacity as data volumes grow and provide the fast, reliable access that training pipelines depend on.
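
To make the storage layer concrete, here is a minimal local sketch of a layout convention commonly used with object stores: writing raw records under Hive-style, date-partitioned prefixes (`date=YYYY-MM-DD`) so that later jobs can read only the slices they need. A temporary directory stands in for a bucket. The `raw/events` prefix, the `part-0.csv` file name, and the record fields are hypothetical.

```python
import csv
import tempfile
from pathlib import Path


def write_partitioned(root: Path, records: list[dict]) -> list[Path]:
    """Write records as CSV files under Hive-style date partitions.

    `root` stands in for an object-store bucket; the layout
    raw/events/date=.../part-0.csv is an illustrative convention.
    """
    by_date: dict[str, list[dict]] = {}
    for rec in records:
        by_date.setdefault(rec["date"], []).append(rec)

    written = []
    for date, rows in sorted(by_date.items()):
        part_dir = root / "raw" / "events" / f"date={date}"
        part_dir.mkdir(parents=True, exist_ok=True)
        path = part_dir / "part-0.csv"
        with path.open("w", newline="") as f:
            writer = csv.DictWriter(f, fieldnames=list(rows[0].keys()))
            writer.writeheader()
            writer.writerows(rows)
        written.append(path)
    return written


def read_partition(root: Path, date: str) -> list[dict]:
    """Read back a single day's partition without touching the others."""
    path = root / "raw" / "events" / f"date={date}" / "part-0.csv"
    with path.open(newline="") as f:
        return list(csv.DictReader(f))


if __name__ == "__main__":
    events = [
        {"date": "2024-01-01", "user": "a", "amount": "10"},
        {"date": "2024-01-01", "user": "b", "amount": "5"},
        {"date": "2024-01-02", "user": "a", "amount": "7"},
    ]
    with tempfile.TemporaryDirectory() as tmp:
        paths = write_partitioned(Path(tmp), events)
        print([p.parent.name for p in paths])  # ['date=2024-01-01', 'date=2024-01-02']
        print(read_partition(Path(tmp), "2024-01-02"))
```

Partitioning by a field that queries often filter on, such as date, lets engines like Spark or Hive skip whole directories instead of scanning every file.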

Data Preprocessing

Once data is collected, it must be prepared before being used for model training. This step includes cleaning, normalizing, and transforming raw data into a structured format.

Tools such as Pandas, Apache Spark, and DataWrangler help simplify this process, ensuring that data is consistent, reliable, and ready for analysis. Effective preprocessing lays the groundwork for building accurate models.
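
The cleaning and normalizing steps described above can be sketched in plain Python. In practice Pandas or Spark would do this on DataFrames. This standard-library version works on hypothetical records whose fields (`age`, `income`) are all numeric. It drops rows with missing values, trims stray whitespace, and min-max scales the chosen columns into the range [0, 1].

```python
def clean_rows(rows: list[dict]) -> list[dict]:
    """Drop rows with missing fields and convert numeric strings to floats."""
    cleaned = []
    for row in rows:
        values = {k: (v.strip() if isinstance(v, str) else v) for k, v in row.items()}
        if any(v in (None, "") for v in values.values()):
            continue  # skip incomplete records
        cleaned.append({k: float(v) for k, v in values.items()})
    return cleaned


def min_max_scale(rows: list[dict], columns: list[str]) -> list[dict]:
    """Rescale each listed column to [0, 1] using that column's min and max."""
    scaled = [dict(r) for r in rows]
    for col in columns:
        lo = min(r[col] for r in rows)
        hi = max(r[col] for r in rows)
        span = hi - lo
        for r in scaled:
            # A constant column has no spread; map it to 0.0 to avoid dividing by zero.
            r[col] = (r[col] - lo) / span if span else 0.0
    return scaled


raw = [
    {"age": " 30 ", "income": "50000"},
    {"age": "", "income": "62000"},  # missing age: dropped
    {"age": "50", "income": "90000"},
    {"age": "40", "income": "70000"},
]
print(min_max_scale(clean_rows(raw), ["age", "income"]))
# [{'age': 0.0, 'income': 0.0}, {'age': 1.0, 'income': 1.0}, {'age': 0.5, 'income': 0.5}]
```

Scaling features to a common range keeps large-valued columns, such as income, from dominating distance-based or gradient-based models.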

Model Building

Model building is where the core modeling work happens. This stage involves selecting appropriate algorithms and using frameworks to develop systems capable of learning from data.

Well-established frameworks like TensorFlow, PyTorch, and Scikit-learn provide powerful functionality that supports both beginners and experienced developers. These tools make it easier to experiment, test, and build high-performing models tailored to different use cases.
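
As a framework-free illustration of what "selecting an algorithm and fitting it to data" means, here is ordinary least-squares linear regression for a single feature, written out with its closed-form solution. It is the same model Scikit-learn provides as `LinearRegression`, but this is not that library's code. The toy data is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LinearModel:
    slope: float
    intercept: float

    def predict(self, x: float) -> float:
        return self.slope * x + self.intercept


def fit_linear(xs: list[float], ys: list[float]) -> LinearModel:
    """Fit y = slope * x + intercept by ordinary least squares (closed form)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return LinearModel(slope=slope, intercept=mean_y - slope * mean_x)


model = fit_linear([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])  # data follows y = 2x + 1
print(model.slope, model.intercept)  # 2.0 1.0
print(model.predict(10.0))           # 21.0
```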

Model Training

Training is where a model actually learns. An optimization algorithm repeatedly adjusts the model’s parameters to reduce its error on the training data, while hyperparameters such as the learning rate or number of epochs are tuned to improve the result.

For larger or more complex models, experiment-tracking tools such as Weights & Biases and MLflow, together with orchestration platforms such as Kubernetes, make training more manageable.

These systems help track experiments, manage configurations, and scale computational power as needed.
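
To show what "tracking experiments" means during training, here is a minimal gradient-descent loop for a one-feature linear model. It records its hyperparameters and per-epoch loss in a small in-memory tracker. MLflow (`mlflow.log_param`, `mlflow.log_metric`) and Weights & Biases persist this kind of record. The `RunTracker` class here is a hypothetical stand-in and does not reproduce either tool's API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RunTracker:
    """Hypothetical in-memory stand-in for an experiment tracker."""
    params: dict = field(default_factory=dict)
    metrics: dict = field(default_factory=dict)

    def log_param(self, key: str, value) -> None:
        self.params[key] = value

    def log_metric(self, key: str, value: float, step: int) -> None:
        # Keep the full history so runs can be compared epoch by epoch.
        self.metrics.setdefault(key, []).append((step, value))


def train(xs: list[float], ys: list[float], learning_rate: float = 0.05,
          epochs: int = 500, tracker: Optional[RunTracker] = None):
    """Fit y = w * x + b by batch gradient descent on mean squared error."""
    tracker = tracker if tracker is not None else RunTracker()
    tracker.log_param("learning_rate", learning_rate)
    tracker.log_param("epochs", epochs)
    w, b, n = 0.0, 0.0, len(xs)
    for epoch in range(epochs):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        tracker.log_metric("mse", sum(e * e for e in errors) / n, step=epoch)
        # Gradients of the MSE with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, tracker


w, b, run = train([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])
print(round(w, 3), round(b, 3), run.params)
```

Logging the settings alongside the loss curve is what makes two runs comparable later. Without it, a better result cannot be traced back to the configuration that produced it.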

Deployment & Monitoring

After a model has been trained and validated, it’s ready to be deployed. Deployment brings the model into production, where it can start generating real-world value.

Solutions like Docker and Kubernetes simplify this transition. Docker packages the model and its dependencies into a container that runs the same way in development and production, and Kubernetes schedules and scales those containers. Once the model is deployed, Prometheus collects performance metrics and Grafana visualizes them, helping teams confirm that the model stays reliable and effective over time.
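
Here is a minimal sketch of the serving-plus-monitoring pattern, using only Python's standard library. It exposes a `/predict` endpoint for a hypothetical linear model and a `/metrics` endpoint in the Prometheus text exposition format, which a Prometheus server could scrape and Grafana could chart. In production this would more likely be a framework such as Flask running inside a Docker image. The endpoint paths, metric names, and model parameters here are illustrative assumptions.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical trained parameters; a real service would load a saved model artifact.
MODEL = {"slope": 2.0, "intercept": 1.0}

_lock = threading.Lock()
_stats = {"requests": 0, "errors": 0}


def render_metrics() -> str:
    """Render request counters in the Prometheus text exposition format."""
    with _lock:
        requests, errors = _stats["requests"], _stats["errors"]
    return (
        "# HELP predictions_total Prediction requests received.\n"
        "# TYPE predictions_total counter\n"
        f"predictions_total {requests}\n"
        "# HELP prediction_errors_total Prediction requests that failed.\n"
        "# TYPE prediction_errors_total counter\n"
        f"prediction_errors_total {errors}\n"
    )


class ModelHandler(BaseHTTPRequestHandler):
    def _send(self, status: int, body: str, content_type: str) -> None:
        data = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_POST(self) -> None:
        if self.path != "/predict":
            self._send(404, "not found", "text/plain")
            return
        with _lock:
            _stats["requests"] += 1
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length))
            y = MODEL["slope"] * float(payload["x"]) + MODEL["intercept"]
            self._send(200, json.dumps({"prediction": y}), "application/json")
        except (ValueError, KeyError, TypeError):
            with _lock:
                _stats["errors"] += 1
            self._send(400, json.dumps({"error": 'expected JSON like {"x": 1.5}'}), "application/json")

    def do_GET(self) -> None:
        if self.path == "/metrics":
            self._send(200, render_metrics(), "text/plain; version=0.0.4")
        else:
            self._send(404, "not found", "text/plain")

    def log_message(self, *args) -> None:
        pass  # silence per-request access logs for this sketch


def start_server(port: int = 0) -> ThreadingHTTPServer:
    """Start the service on a background thread; port 0 picks a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), ModelHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

For example, on a freshly started server, POSTing `{"x": 3}` to `/predict` returns `{"prediction": 7.0}`, and `/metrics` then reports `predictions_total 1`.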

Relevance of Each Component in ML Development

Each layer within the machine learning tech stack serves a unique and essential purpose, directly influencing the success of any ML initiative.

- Data Collection & Storage is the first step, ensuring that relevant, well-organized data is readily available. Reliable access to quality data forms the backbone of effective model training and helps avoid issues later in the process.

- Data Preprocessing transforms raw information into a usable format by cleaning, organizing, and normalizing it. This step is vital for reducing errors and inconsistencies that could impact model performance.

- Model Building is where ideas take shape: models are developed, evaluated, and compared to identify the best fit for the problem at hand. This stage lays the groundwork for intelligent systems capable of making accurate decisions.

- Model Training takes those models and fits them to data. By iterating over the training data, an optimizer adjusts each model’s parameters to reduce its error, making the model more accurate and dependable for real-world use.

- Deployment and Monitoring bring everything together, allowing the model to be used in practical applications. At the same time, performance tracking ensures it remains stable, responsive, and aligned with expectations over time.

When each of these layers is optimized, the entire ML workflow becomes more efficient and effective. It reduces development time, minimizes potential errors, and ultimately leads to smarter, more scalable solutions.

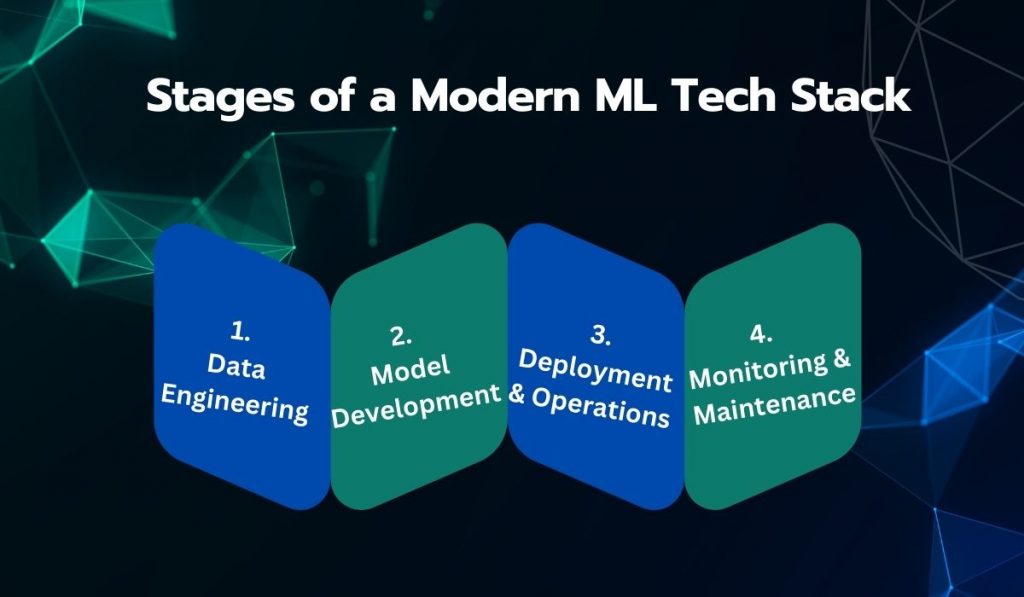

Stages of a Modern Machine Learning Tech Stack

A machine learning project typically unfolds in several important stages. Each stage plays a vital role in the success of the overall workflow and relies on specific technologies to move smoothly from one phase to the next.

Data Engineering

The process begins with data engineering, where raw data is collected, cleaned, and transformed into a usable format. This stage is all about building a strong foundation.

Whether the job involves massive batches or real-time data streams, teams rely on tools such as Apache Kafka for streaming event data and Hadoop and Spark for large-scale batch processing (Spark also supports stream processing) to manage complex data pipelines efficiently. When this stage is done well, it sets up a smooth transition into model development.
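
Streaming pipelines built on Kafka Streams or Spark Structured Streaming commonly aggregate events into fixed time windows before the results reach a feature store or model. Here is a library-free sketch of a tumbling-window sum over `(timestamp, key, value)` events. The event shape and the assumption that events arrive in timestamp order are simplifications. Real stream processors also have to handle late and out-of-order data.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def tumbling_windows(
    events: Iterable[tuple[float, str, float]], window_seconds: float
) -> Iterator[tuple[float, dict[str, float]]]:
    """Group (timestamp, key, value) events into fixed, non-overlapping windows.

    Assumes events arrive in timestamp order, as from a single ordered stream
    partition. Yields (window_start, {key: summed value}) as each window closes.
    """
    current_start = None
    totals: dict[str, float] = defaultdict(float)
    for ts, key, value in events:
        start = ts - (ts % window_seconds)
        if current_start is not None and start != current_start:
            yield current_start, dict(totals)
            totals = defaultdict(float)
        current_start = start
        totals[key] += value
    if current_start is not None:
        yield current_start, dict(totals)  # flush the final, possibly partial window


stream = [(0, "a", 1), (30, "a", 2), (59, "b", 5), (61, "a", 4), (125, "b", 1)]
for window_start, sums in tumbling_windows(stream, window_seconds=60):
    print(window_start, sums)
```

Because the function is a generator, it emits each window as soon as the window closes instead of waiting for the whole stream. That is the behavior a real-time pipeline needs.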

Model Development

Once the data is ready, the focus shifts to developing the machine learning model itself. This is where experimentation begins. Teams explore different algorithms and approaches to find the best fit for their specific needs.

Platforms such as Scikit-learn, TensorFlow, and Keras provide the flexibility and functionality to design, test, and refine models effectively. With these tools, it’s easier to move from idea to implementation while keeping performance at the forefront.
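
Comparing candidate approaches fairly usually means cross-validation: each model is trained on part of the data and scored on the part it did not see. The sketch below compares a hypothetical mean-only baseline against a least-squares line using k-fold mean squared error. For simplicity it uses contiguous folds. In practice you would shuffle first, for example with Scikit-learn's `KFold(shuffle=True)` or `cross_val_score`. The data is synthetic.

```python
from statistics import mean


def fit_mean(xs, ys):
    """Baseline: always predict the training mean of y."""
    m = mean(ys)
    return lambda x: m


def fit_line(xs, ys):
    """One-feature least-squares line (closed form)."""
    mx, my = mean(xs), mean(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return lambda x: slope * x + (my - slope * mx)


def k_fold_mse(fit, xs, ys, k=4):
    """Average held-out mean squared error over k contiguous folds."""
    n = len(xs)
    fold_errors = []
    for i in range(k):
        lo, hi = i * n // k, (i + 1) * n // k
        predict = fit(xs[:lo] + xs[hi:], ys[:lo] + ys[hi:])  # train on the other folds
        errs = [(predict(x) - y) ** 2 for x, y in zip(xs[lo:hi], ys[lo:hi])]
        fold_errors.append(mean(errs))
    return mean(fold_errors)


xs = [float(i) for i in range(12)]
ys = [2.0 * x + 1.0 + (0.5 if i % 2 else -0.5) for i, x in enumerate(xs)]  # noisy line
scores = {name: k_fold_mse(fit, xs, ys) for name, fit in [("mean baseline", fit_mean), ("linear", fit_line)]}
best = min(scores, key=scores.get)
print(scores, "->", best)
```

Including a trivial baseline is a cheap sanity check. A candidate model that cannot beat "predict the average" is not learning anything useful.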

Deployment & Operations

After the model has been built and validated, it’s time to bring it into the real world. Deployment involves making the model accessible within applications or systems, often by wrapping it in a web service with a framework such as Flask, packaging it in a container with Docker, and running it at scale with an orchestrator such as Kubernetes.

At this stage, MLOps practices come into play, ensuring that deployment pipelines are repeatable, scalable, and easy to manage. Tools such as Kubeflow and MLflow support these workflows, making it easier to maintain consistency across environments.
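
One MLOps building block is a model registry. It records each model version so that whatever runs in production can be traced and rolled back. MLflow's Model Registry provides this as a full service. The in-memory `ModelRegistry` below is a hypothetical simplification of the core idea and does not follow MLflow's API. Each version stores a fingerprint of its training configuration plus its evaluation metric, and exactly one version is marked as production at a time.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelVersion:
    version: int
    config_hash: str
    metric: float


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; not the MLflow or Kubeflow API."""
    versions: list[ModelVersion] = field(default_factory=list)
    production: Optional[int] = None

    def register(self, config: dict, metric: float) -> ModelVersion:
        # Hash a canonical JSON form of the config so identical settings
        # always produce the same fingerprint, regardless of key order.
        canonical = json.dumps(config, sort_keys=True).encode("utf-8")
        digest = hashlib.sha256(canonical).hexdigest()[:12]
        mv = ModelVersion(len(self.versions) + 1, digest, metric)
        self.versions.append(mv)
        return mv

    def promote(self, version: int) -> None:
        if not any(v.version == version for v in self.versions):
            raise KeyError(f"unknown version {version}")
        # Earlier versions stay registered, so rolling back is just promoting an older one.
        self.production = version


registry = ModelRegistry()
v1 = registry.register({"lr": 0.05, "epochs": 500}, metric=0.27)
v2 = registry.register({"epochs": 500, "lr": 0.05}, metric=0.25)
registry.promote(v2.version)
print(v1.config_hash == v2.config_hash, registry.production)  # True 2
```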

Monitoring & Maintenance

Deployment isn’t the end of the journey. Continuous monitoring is essential to make sure the model stays accurate and performs well over time.

Monitoring tools like Prometheus and Grafana, fed with model-level metrics such as prediction distributions or accuracy on newly labeled data, allow teams to detect performance drift or system issues early, so they can act quickly and maintain the model’s integrity. This proactive approach helps organizations stay confident in their ML applications as conditions evolve.
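
Prometheus and Grafana only show the metrics a service exports, so detecting drift usually means computing a drift statistic yourself and exporting it. One common choice is the Population Stability Index (PSI). It compares the binned distribution of a feature or model score in production against a training-time baseline: PSI = sum over bins of (actual_i - expected_i) * ln(actual_i / expected_i), where each term is a bin's share of the sample. The sketch below is a plain-Python version. The equal-width binning and the `eps` floor are simplifying choices, not a standard.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-4) -> float:
    """Population Stability Index between a baseline sample and a live sample.

    Bin edges are equal-width over the baseline's range; eps keeps empty bins
    from causing log(0) or division by zero.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]       # training-time scores
shifted = [0.5 + i / 200 for i in range(100)]  # production scores bunched at the top
print(round(psi(baseline, baseline), 4), psi(baseline, shifted) > 0.25)  # 0.0 True
```

A frequently cited rule of thumb treats a PSI below 0.1 as stable and above 0.25 as significant drift. Thresholds should still be validated per feature. The resulting value can be exported as a gauge alongside the service's other metrics.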

Future Trends in ML Tech Stack

The machine learning tech stack is evolving rapidly to keep up with the growing demands of modern applications. With innovation driving change across industries, several trends are reshaping how ML systems are built, deployed, and maintained.

Automation of ML Workflows

Automation is changing how machine learning work gets done. With the rise of MLOps and AutoML, teams can streamline repetitive and time-consuming tasks like data preprocessing, model training, and evaluation. This approach reduces human error, accelerates development cycles, and improves collaboration across teams.

Tools such as AutoML platforms, Kubeflow, and integrated services in cloud environments are making it easier to manage end-to-end machine learning pipelines at scale.
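
Orchestrators such as Kubeflow Pipelines express a workflow as a sequence, or more generally a graph, of steps that run without manual hand-offs. The sketch below shows the idea in plain Python: preprocessing, training, evaluation on held-out data, and a quality gate that decides whether the new model may be deployed. The step functions, context keys, and `max_mse` threshold are hypothetical. The training step fits a line through the origin only to keep the example short.

```python
from typing import Callable

Step = Callable[[dict], dict]


def run_pipeline(steps: list[tuple[str, Step]], context: dict) -> dict:
    """Run named steps in order, passing a shared context dict between them."""
    for name, step in steps:
        context = step(context)
        context.setdefault("log", []).append(name)
    return context


def preprocess(ctx: dict) -> dict:
    # Drop records with a missing target (hypothetical cleaning rule).
    ctx["data"] = [(x, y) for x, y in ctx["raw"] if y is not None]
    return ctx


def train_step(ctx: dict) -> dict:
    # Least-squares line through the origin: slope = sum(x*y) / sum(x*x).
    data = ctx["data"]
    ctx["slope"] = sum(x * y for x, y in data) / sum(x * x for x, _ in data)
    return ctx


def evaluate(ctx: dict) -> dict:
    # Score on held-out data, never on the training set.
    holdout = ctx["holdout"]
    ctx["mse"] = sum((ctx["slope"] * x - y) ** 2 for x, y in holdout) / len(holdout)
    return ctx


def gate(ctx: dict) -> dict:
    # Quality gate: only mark the model deployable if it meets the threshold.
    ctx["deploy"] = ctx["mse"] <= ctx["max_mse"]
    return ctx


steps = [("preprocess", preprocess), ("train", train_step), ("evaluate", evaluate), ("gate", gate)]
result = run_pipeline(steps, {
    "raw": [(1, 2.0), (2, 4.0), (3, None), (4, 8.0)],
    "holdout": [(5, 10.5)],
    "max_mse": 0.5,
})
print(result["slope"], result["mse"], result["deploy"])  # 2.0 0.25 True
```

The gate is what turns a script into an automated release process. A retrained model that scores worse than the threshold simply never reaches production.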

Embracing Cloud-Based ML Services

As machine learning models grow more complex and require greater computational power, the shift toward cloud-based solutions continues to gain momentum. Services offered by platforms like Google Cloud Vertex AI, Amazon SageMaker, and Azure Machine Learning give teams the ability to develop, train, and deploy models without the overhead of managing physical infrastructure.

These platforms bring scalability, flexibility, and cost-efficiency, making advanced ML capabilities accessible to organizations of all sizes.

AI-Assisted Model Development

Artificial intelligence is now playing a supporting role in building machine learning models themselves. AI-powered tools can automatically select algorithms, tune hyperparameters, and optimize model performance with minimal manual intervention.

This can improve accuracy and also frees up valuable time for data scientists to focus on strategic tasks. Solutions like H2O.ai, DataRobot, and SageMaker Autopilot are leading the way in helping teams create more accurate models faster.
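
Tools like H2O.ai, DataRobot, and SageMaker Autopilot automate far more than this, including feature engineering, algorithm choice, and ensembling. The core loop of hyperparameter tuning, though, can be shown with exhaustive grid search: train one model per combination and keep the one that scores best on held-out validation data. This is a simple, non-AI baseline of what those tools automate, not how any of them work internally. Scikit-learn's `GridSearchCV` implements the same idea with cross-validation. The model, grid values, and data below are hypothetical.

```python
import itertools


def train_gd(xs, ys, lr, epochs):
    """Fit y = w * x + b by batch gradient descent on mean squared error."""
    w = b = 0.0
    n = len(xs)
    for _ in range(epochs):
        errs = [w * x + b - y for x, y in zip(xs, ys)]
        w -= lr * 2 / n * sum(e * x for e, x in zip(errs, xs))
        b -= lr * 2 / n * sum(errs)
    return w, b


def mse(w, b, xs, ys):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grid_search(train, valid, grid):
    """Try every hyperparameter combination; keep the lowest validation MSE."""
    best = None
    keys = list(grid)
    for values in itertools.product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        w, b = train_gd(*train, **params)
        score = mse(w, b, *valid)
        if best is None or score < best[0]:
            best = (score, params)
    return best


train_data = ([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])
valid_data = ([5.0, 6.0], [11.0, 13.0])
score, params = grid_search(train_data, valid_data, {"lr": [0.001, 0.01, 0.05], "epochs": [50, 500]})
print(params, score)
```

Grid search cost grows multiplicatively with every hyperparameter added. That is why automated tools typically switch to random search or Bayesian optimization for larger search spaces.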

The Rise of Edge Computing

Machine learning is no longer confined to powerful cloud servers. With the growth of IoT and mobile technology, there’s a significant push toward deploying ML models directly on edge devices. This shift enables real-time processing and decision-making without the latency associated with cloud communication.

Platforms like TensorFlow Lite and AWS IoT Greengrass allow models to run efficiently on smaller devices, making it possible to bring intelligent capabilities to a wide range of environments.
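
A key technique for running models on small devices is quantization: storing weights as 8-bit integers instead of 32-bit floats, which makes them four times smaller. TensorFlow Lite's 8-bit quantization scheme is built on an affine mapping of the form real_value = (q - zero_point) * scale. The sketch below shows that mapping for a plain list of weights, using the unsigned 0 to 255 range for simplicity. It is a simplified per-tensor illustration, not TensorFlow Lite's converter or API.

```python
def quantize_uint8(weights: list[float]) -> tuple[list[int], float, int]:
    """Affine 8-bit quantization: each weight w is approximated by (q - zero_point) * scale."""
    lo, hi = min(min(weights), 0.0), max(max(weights), 0.0)  # range must include 0
    scale = (hi - lo) / 255 or 1.0  # guard against an all-zero tensor
    zero_point = round(-lo / scale)  # the integer that represents 0.0 exactly
    q = [min(255, max(0, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q: list[int], scale: float, zero_point: int) -> list[float]:
    return [(v - zero_point) * scale for v in q]


weights = [-0.8, -0.1, 0.0, 0.25, 0.6, 1.2]
q, scale, zp = quantize_uint8(weights)
restored = dequantize(q, scale, zp)
print(q, zp)
print(max(abs(r - w) for r, w in zip(restored, weights)) <= scale / 2)
```

Each restored weight lies within half a quantization step of the original. Making 0.0 exactly representable matters because zero padding and ReLU outputs are extremely common in neural networks.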

How does BigDataCentric streamline ML deployment for businesses?

At BigDataCentric, we specialize in simplifying the deployment of machine learning models, ensuring that businesses can seamlessly integrate them into real-world applications. Our approach focuses on optimizing the entire deployment pipeline, from automating model deployment to continuous monitoring and maintenance.

We leverage modern tools and practices like MLOps, cloud services, and containerization platforms to ensure that your models are not only deployed efficiently but also scale effortlessly as your business grows.

By using robust platforms like Kubernetes, Docker, and cloud-based solutions, we ensure that models are production-ready and can handle the demands of dynamic environments.

Additionally, our team prioritizes monitoring and performance tracking, helping businesses maintain model accuracy and stability over time. With BigDataCentric’s support, you can focus on innovation while we handle the complexities of ML deployment, ensuring that your models deliver value from day one and evolve with your business needs.

Summing Up

The machine learning tech stack is essential for building, deploying, and maintaining effective ML models. Each stage, from collecting and processing data to monitoring the performance of deployed models, plays a crucial role in ensuring the success of the project.

As the field evolves, innovations like MLOps, AutoML, cloud-based services, and edge computing are transforming how we approach machine learning, making it more efficient and scalable.

By carefully selecting the right tools and platforms for each phase of your ML pipeline, you can ensure that your applications are not only successful but also scalable, efficient, and capable of meeting the demands of a rapidly changing technological landscape.

This thoughtful approach to your tech stack is key to unlocking the full potential of machine learning in your business.

FAQs

Why is it called a Tech Stack?

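A tech stack refers to a combination of technologies, frameworks, and tools that work together to create an application or system. It is called a “stack” because these components are layered, with each layer building on the ones beneath it: storage at the bottom, then data processing, then model development, and deployment on top. In machine learning, a tech stack is the integrated set of tools used to build, train, deploy, and maintain ML models.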

What is the tech stack process?

The tech stack process involves selecting, implementing, and integrating the tools and technologies required for building and operating machine learning models. This process covers data handling, model development, deployment, and ongoing monitoring.

What is the AI Tech Stack?

The AI tech stack includes the tools, libraries, and frameworks used to develop artificial intelligence systems, such as machine learning models, natural language processing applications, and computer vision systems. Popular components include TensorFlow, PyTorch, and Scikit-learn for model building, and cloud platforms like AWS and Azure for scalability.

About Author

Jayanti Katariya is the CEO of BigDataCentric, a leading provider of AI, machine learning, data science, and business intelligence solutions. With 18+ years of industry experience, he has been at the forefront of helping businesses unlock growth through data-driven insights. Passionate about developing creative technology solutions from a young age, he pursued an engineering degree to further this interest. Under his leadership, BigDataCentric delivers tailored AI and analytics solutions to optimize business processes. His expertise drives innovation in data science, enabling organizations to make smarter, data-backed decisions.
