NLP vs LLM: Which Language Model Suits Your Needs?

Artificial Intelligence (AI) has completely transformed the way we interact with technology, especially in understanding and processing human language. Two key players in this transformation are Natural Language Processing (NLP) and Large Language Models (LLMs).

While both terms are often used interchangeably, they have distinct roles in AI-driven language understanding. NLP has been around for decades, serving as the foundation for language-based AI applications such as speech recognition, machine translation, and text analysis. LLMs, on the other hand, represent the latest advancements in AI, powered by deep learning, and are capable of context-aware responses, content generation, and human-like conversations.

But how do NLP and LLMs differ? What are their strengths and limitations? And how do businesses decide when to use NLP, an LLM, or a combination of both?

This blog will explore the differences between NLP and LLM, their core functionalities, applications, benefits, and how they are shaping the future of AI-driven interactions.

What is Natural Language Processing (NLP)?

Natural Language Processing (NLP) is a branch of AI that helps machines read, understand, and respond to human language. It acts as a bridge between everyday communication and machine data, using a mix of AI, computer science, and computational linguistics. By recognizing patterns in data, NLP algorithms convert human language into a format that computers can process effectively.

NLP is built on three key components:

- Syntax Analysis helps machines grasp sentence structure.

- Semantic Analysis focuses on understanding the meaning behind the text.

- Sentiment Analysis determines the emotions or opinions expressed in a piece of text.

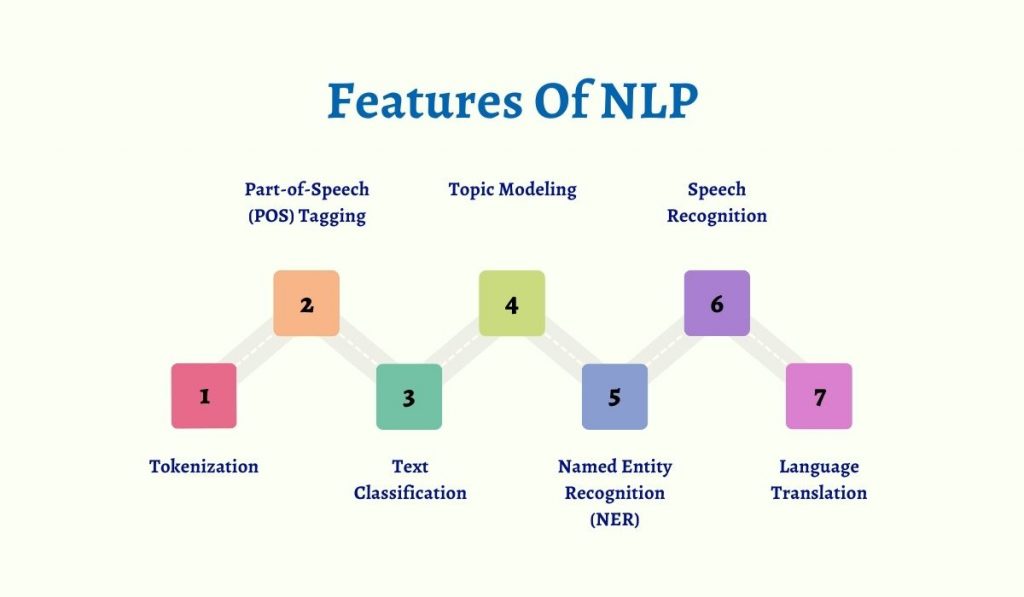

Features of NLP

- Tokenization: Splitting text into words, sentences, or phrases for processing.

- Part-of-Speech (POS) Tagging: Identifying nouns, verbs, adjectives, etc., in a sentence.

- Text Classification: Categorizing text into predefined labels (e.g., spam detection).

- Topic Modeling: Identifying the main topics in a large text corpus.

- Named Entity Recognition (NER): Detecting names of people, places, organizations, and dates.

- Speech Recognition: Converting spoken language into text.

- Language Translation: Converting text from one language to another using machine translation.
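To make the first of these features concrete, here is a minimal rule-based tokenizer in Python using only the standard `re` module. The function names are hypothetical and the regular expressions are simplifying assumptions: production tokenizers, such as those in NLTK or spaCy, also handle abbreviations like "Dr.", URLs, emoji, and language-specific rules that this sketch ignores.

```python
import re

# Naive sentence boundary: whitespace that follows ".", "!" or "?".
# Assumption: the text contains no abbreviations such as "Dr." (they would split wrongly).
SENTENCE_BOUNDARY = re.compile(r"(?<=[.!?])\s+")

# A word (optionally with one internal apostrophe, e.g. "isn't") or a single punctuation mark.
WORD_OR_PUNCT = re.compile(r"\w+(?:'\w+)?|[^\w\s]")


def split_sentences(text: str) -> list[str]:
    """Split text into sentences using the naive boundary rule above."""
    return [s for s in SENTENCE_BOUNDARY.split(text.strip()) if s]


def tokenize_words(sentence: str) -> list[str]:
    """Split one sentence into word and punctuation tokens."""
    return WORD_OR_PUNCT.findall(sentence)


text = "NLP splits text into tokens. Isn't that useful? Yes!"
for sentence in split_sentences(text):
    print(tokenize_words(sentence))
```

Here the second sentence becomes the tokens `Isn't`, `that`, `useful`, and `?`, which is the kind of unit later steps such as POS tagging or NER operate on.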

NLP Applications

NLP is everywhere in our digital world. Here are some common use cases:


Voice Assistants

Apple’s Siri and Amazon’s Alexa use voice recognition to understand user questions and provide helpful answers. People often rely on these assistants for everyday tasks like checking the weather, searching for information online, or adding items to their to-do and shopping lists.


Email Filters

Email spam filters use NLP to spot suspicious words or phrases, flagging potential spam and moving it to the spam folder. Services like Gmail go further, using machine learning to categorize emails into primary, social, promotions, updates, or forums based on sender, content, and similar messages.
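The statistical idea behind a basic spam filter can be sketched with a multinomial Naive Bayes classifier. This is an illustrative assumption, not a description of how Gmail or any particular service works: the four training messages are made up, the class name `NaiveBayesSpamFilter` is hypothetical, and a real filter would learn from far more data and richer signals (sender, links, user reports).

```python
import math
import re
from collections import Counter


def tokens(text: str) -> list[str]:
    """Lowercase word tokens (a deliberately simple tokenizer)."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesSpamFilter:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, messages: list[str], labels: list[str]) -> "NaiveBayesSpamFilter":
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for message, label in zip(messages, labels):
            self.word_counts[label].update(tokens(message))
        self.vocab = set().union(*self.word_counts.values())
        return self

    def log_score(self, message: str, label: str) -> float:
        # log P(label) + sum of log P(token | label), with add-one smoothing.
        total_docs = sum(self.doc_counts.values())
        score = math.log(self.doc_counts[label] / total_docs)
        counts = self.word_counts[label]
        denom = sum(counts.values()) + len(self.vocab)
        for tok in tokens(message):
            score += math.log((counts[tok] + 1) / denom)
        return score

    def predict(self, message: str) -> str:
        return max(self.classes, key=lambda c: self.log_score(message, c))


train = [
    ("Win a free prize now, click here", "spam"),
    ("Claim your free cash reward today", "spam"),
    ("Meeting moved to Tuesday afternoon", "ham"),
    ("Can you review the project report", "ham"),
]
model = NaiveBayesSpamFilter().fit([m for m, _ in train], [label for _, label in train])
print(model.predict("Free prize waiting, click now"))  # spam
print(model.predict("Project meeting on Tuesday"))     # ham
```

Scoring in log space avoids numeric underflow on long messages, and add-one smoothing keeps an unseen word (such as "waiting" above) from driving a class's probability to zero.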


Predictive Text

If you’ve ever used autocorrect or autocomplete while texting on your smartphone, you’ve seen NLP in action. These features are a game-changer for both professionals and everyday users.

Autocorrect detects misspellings and suggests corrections based on dictionary words, while autocomplete predicts the next likely word based on the sentence context, making typing faster and more efficient.
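Both ideas can be sketched in a few lines of Python: autocorrect as picking the dictionary word with the smallest Levenshtein edit distance, and autocomplete as suggesting the word that most often followed the previous word in a sample corpus (a bigram model). The tiny dictionary, the corpus, and the function names are hypothetical; phone keyboards use much larger, personalized language models.

```python
from collections import Counter, defaultdict
from typing import Optional


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if the letters match)
            ))
        prev = curr
    return prev[-1]


def autocorrect(word: str, dictionary: list[str]) -> str:
    """Return the dictionary word closest to `word` (ties go to the earlier entry)."""
    return min(dictionary, key=lambda w: edit_distance(word, w))


def build_bigrams(corpus: str) -> dict[str, Counter]:
    """Count which word follows which in the corpus."""
    words = corpus.lower().split()
    following: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def autocomplete(previous_word: str, bigrams: dict[str, Counter]) -> Optional[str]:
    """Suggest the most frequent next word, or None if the word was never seen."""
    candidates = bigrams.get(previous_word.lower())
    return candidates.most_common(1)[0][0] if candidates else None


dictionary = ["word", "world", "would"]
print(autocorrect("wrld", dictionary))  # world

bigrams = build_bigrams("see you soon see you later see you soon")
print(autocomplete("you", bigrams))     # soon
```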


Translation Applications

Traveling to a foreign country can be daunting if you don’t speak the local language. NLP powers tools like Google Translate, making it easier to communicate and bridge language gaps effortlessly.

What are Large Language Models (LLMs)?

Large Language Models (LLMs) are advanced AI models designed to understand and generate human-like text. They are trained on massive, high-quality datasets containing billions of words from books, websites, articles, and other online sources. Unlike traditional NLP models, which are usually built to interpret language for one specific task, LLMs are trained to predict the next token (a word or part of a word) from the preceding context, and they generate text by repeating that prediction one token at a time.
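Next-token prediction can be illustrated with a toy example: the model assigns a raw score (a logit) to every token in its vocabulary, a softmax turns those scores into probabilities, and a decoding rule picks one. The five-word vocabulary and the logit values below are invented for illustration; a real LLM computes logits over tens of thousands of tokens with a neural network, and often samples from the distribution instead of always taking the most likely token.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert raw scores into probabilities that sum to 1.

    A temperature below 1 sharpens the distribution; above 1 flattens it.
    """
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical vocabulary and scores for the context "The cat sat on the"
vocab = ["mat", "dog", "moon", "sofa", "car"]
logits = [3.2, 0.5, 1.0, 2.4, 0.1]

probs = softmax(logits)
for token, p in sorted(zip(vocab, probs), key=lambda tp: -tp[1]):
    print(f"{token:>5}: {p:.3f}")

# Greedy decoding: choose the single most probable next token.
next_token = vocab[max(range(len(vocab)), key=probs.__getitem__)]
print("next token:", next_token)
```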

Key components of an LLM include:

- Tokenization: Splits text into smaller units (tokens) for processing.

- Embedding: Represents tokens in a way that captures their meaning and relationships, helping the model understand context.

- Attention Mechanisms: In particular, self-attention, which weighs how strongly each token relates to every other token in the input so the model can focus on the most relevant context.

- Pretraining: Exposes LLMs to vast amounts of language data, helping them learn grammar, facts, and patterns.

- Fine-Tuning: Refines the model using specific datasets or tasks to enhance its performance in a particular area.

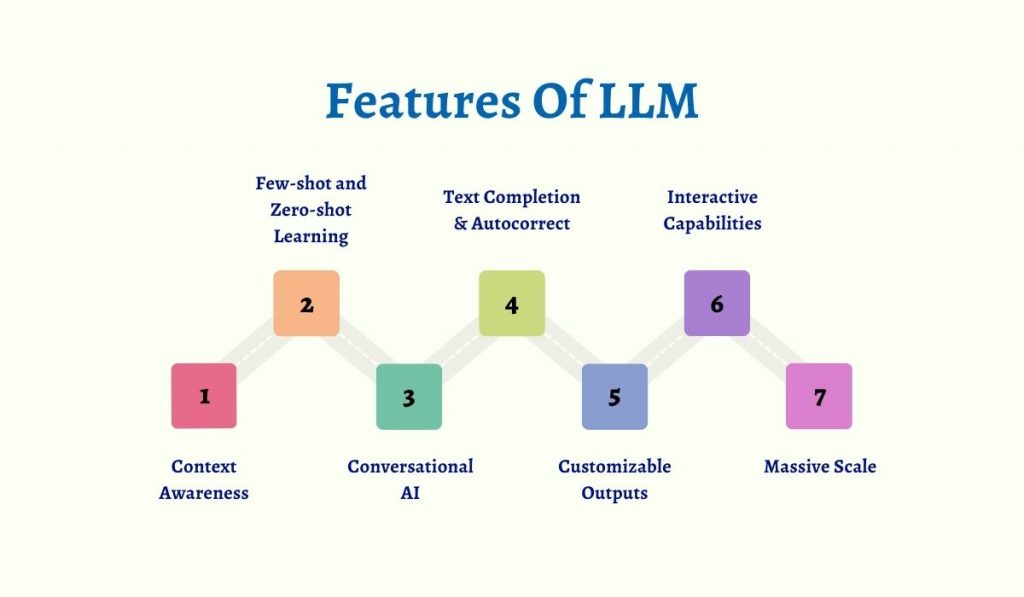
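The self-attention component listed above is usually implemented as scaled dot-product attention: each token's query is compared with every token's key, the scores are divided by the square root of the vector dimension and passed through a softmax, and the resulting weights average the value vectors. The pure-Python sketch below does this for a made-up three-token input. It is a simplification: the queries, keys, and values are just the input embeddings, whereas a real transformer derives them through learned projection matrices and runs many attention heads in parallel.

```python
import math

Vector = list[float]


def softmax(scores: Vector) -> Vector:
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def self_attention(queries: list[Vector], keys: list[Vector], values: list[Vector]):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, computed row by row."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How strongly this token attends to each token in the sequence.
        weights = softmax([dot(q, k) / math.sqrt(d) for k in keys])
        # Weighted average of the value vectors.
        out = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 4-dimensional embeddings for a three-token input.
embeddings = [
    [1.0, 0.0, 1.0, 0.0],  # token 0
    [0.0, 1.0, 0.0, 1.0],  # token 1
    [1.0, 0.0, 0.9, 0.1],  # token 2 (similar to token 0)
]

# Simplification: the embeddings serve directly as queries, keys, and values.
outputs, weights = self_attention(embeddings, embeddings, embeddings)
for i, row in enumerate(weights):
    print(f"token {i} attention weights:", [round(w, 3) for w in row])
```

In this toy input, token 0 attends more to token 2 (whose embedding is similar) than to token 1, which is the kind of relationship self-attention is meant to capture.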

Features of LLM

- Context Awareness: LLMs retain and use context across longer conversations or documents, up to the limit of their context window.

- Few-shot and Zero-shot Learning: They can perform new tasks from just a few examples in the prompt (few-shot) or from instructions alone, without any task-specific examples (zero-shot).

- Conversational AI: LLMs power chatbots and virtual assistants with near-human conversational ability.

- Text Completion & Autocorrect: They assist in predictive typing and error correction.

- Reasoning & Logic: Advanced LLMs can solve problems, perform logical reasoning, and answer complex questions.

- Multimodal Capabilities: Some LLMs can process and generate text along with images, audio, and even video.

- Code Generation & Debugging: They assist in writing and debugging code in various programming languages.
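In practice, few-shot learning usually means placing a handful of worked examples directly in the prompt rather than retraining the model, while zero-shot means giving only the instruction. The helper below only assembles the prompt text. The function name, the `Input:`/`Label:` layout, and the example reviews are assumptions for illustration, and no particular LLM API is implied: the resulting string would be sent to whichever model you use.

```python
def build_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble a zero-shot (no examples) or few-shot (some examples) prompt."""
    parts = [instruction.strip(), ""]
    for text, label in examples:
        parts.append(f"Input: {text}\nLabel: {label}\n")
    # The model is expected to continue the text after the final "Label:".
    parts.append(f"Input: {query}\nLabel:")
    return "\n".join(parts)


instruction = "Classify the sentiment of each product review as positive or negative."
examples = [
    ("The battery lasts all day and it charges fast.", "positive"),
    ("It stopped working after a week.", "negative"),
]
query = "Arrived broken and support never replied."

zero_shot = build_prompt(instruction, [], query)
few_shot = build_prompt(instruction, examples, query)
print(few_shot)
```

The only difference between the two prompts is the worked examples; the model's weights are the same in both cases.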

LLM Applications

LLMs power cutting-edge applications, such as:


Content Creation

LLMs can support a wide range of content creation tasks, from generating outlines for articles or meetings to helping with character development for stories.

They’re also useful for teachers creating lesson plans, travelers planning itineraries, and even brainstorming social media content. These tools serve as a great starting point, allowing users to refine ideas with their own insights, creativity, and personal touch.


Code Generation

LLMs can assist developers by generating code from a natural-language description or from the surrounding code, predicting what the developer most likely intends. They can fill in boilerplate from predefined templates and write routine constructs such as conditional statements and loops, speeding up everyday coding tasks.

Additionally, LLMs can help identify potential bugs and syntax errors by applying best practices and coding standards learned during training.

Customer Service Chatbots

Chatbots are valuable across different industries, especially in eCommerce, where they help businesses provide quick responses, reduce delays, and boost sales. They’re great for handling specific tasks like answering FAQs, retrieving order details, and scheduling appointments or meetings efficiently.


Sentiment Analysis

LLMs can analyze customer reviews to assess overall sentiment toward a product or service. Instead of manually reading each review, businesses gather feedback from review sites, social media, and other platforms, then process it using a pre-trained or fine-tuned model.

The LLM identifies patterns in the text and assigns a sentiment score, helping organizations monitor public perception and enhance their social media strategy.
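The workflow described here (collect reviews, score each one, then aggregate) can be sketched as follows. The `score_review` function is a hypothetical stand-in: it uses a tiny hand-written word list so the example runs on its own, whereas in the setup described above it would call a pre-trained or fine-tuned model and return that model's score. The score range of -1 (negative) to 1 (positive) and the summary metrics are also assumptions.

```python
from statistics import mean

# Placeholder lexicon; in a real pipeline score_review would call a model instead.
POSITIVE = {"great", "love", "fast", "excellent", "helpful"}
NEGATIVE = {"broken", "slow", "terrible", "refund", "disappointed"}


def score_review(text: str) -> float:
    """Hypothetical scorer returning a value in [-1, 1]."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    return 0.0 if pos + neg == 0 else (pos - neg) / (pos + neg)


def summarize(reviews: list[str]) -> dict[str, float]:
    """Aggregate per-review scores into overall sentiment metrics."""
    scores = [score_review(r) for r in reviews]
    return {
        "average_score": mean(scores),
        "share_positive": sum(s > 0 for s in scores) / len(scores),
        "share_negative": sum(s < 0 for s in scores) / len(scores),
    }


reviews = [
    "Great product, fast delivery!",
    "Arrived broken. Very disappointed.",
    "Love it, support was helpful.",
    "It does the job.",
]
print(summarize(reviews))
```

Keeping the scorer behind one function means the aggregation code stays the same whether the scores come from a simple lexicon or from an LLM.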

Key Differences: NLP vs LLM

| Feature | NLP | LLM |
|---|---|---|
| Scope | Processes text & speech | Generates human-like text |
| Training Approach | Rule-based & statistical | Deep learning-based |
| Context Awareness | Limited | Retains long-term context |
| Scalability | Moderate | Highly scalable |
| Computational Power | Lower | High processing power required |
| Use Cases | Translation, POS tagging | Chatbots, content creation |

In-Depth Comparison of NLP vs LLM

Scope of Application

NLP is focused primarily on understanding and processing language. It’s great for tasks like language translation, sentiment analysis, or entity recognition, which involve interpreting predefined information.

LLMs, on the other hand, go beyond mere processing; they can generate human-like text and adapt to multiple use cases, from content creation to chatbots. LLMs offer a broader scope, tackling a variety of tasks without needing separate models for each.

Training Paradigm

NLP models are typically trained for specific, targeted tasks like text classification or translation, and they rely heavily on labeled data. LLMs, however, are trained on massive datasets and can generalize across tasks.

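LLMs are pretrained with self-supervised learning, in which the training signal comes from the text itself (for example, predicting the next token), so no manual labels are needed. This lets them perform a wide array of functions without requiring custom training for each new task, making their learning approach more flexible and scalable.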

Architectural Design

NLP models are often built using simpler machine learning techniques like decision trees, support vector machines, or recurrent neural networks (RNNs). They tend to focus on task-specific needs.

LLMs, however, use more complex architectures such as transformers, which enable them to handle vast amounts of data and maintain context across long sequences. The transformer design helps LLMs excel in natural language understanding and generation.

Contextual Understanding

NLP models generally analyze text within a limited context, making them less effective at understanding nuances over longer conversations. They might struggle with ambiguous phrases or changing contexts.

LLMs, in contrast, are designed to maintain context over longer interactions, giving them the ability to understand deeper meaning and follow conversations fluidly. This makes them far more effective in handling dynamic, real-time conversations.

Fine-tuning and Transfer Learning

Adapting a traditional NLP model to a new task typically means training a new model from scratch, or retraining an existing one, on labeled task-specific data, which can be resource-intensive.

LLMs, however, excel in transfer learning, where they are pre-trained on vast datasets and then fine-tuned on smaller, task-specific datasets. This enables LLMs to perform well across a variety of applications without needing a complete rebuild for every new task.
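The pretrain-then-adapt pattern can be illustrated with its simplest form, often called a linear probe: a pretrained model's representations are kept frozen, and only a small new classifier is trained on a few labeled examples. Everything here is a hypothetical stand-in. The embedding vectors are invented rather than produced by a real model, and full fine-tuning of an LLM would also update the pretrained weights (or added adapter weights) rather than training only a new output layer.

```python
import math

# Hypothetical frozen "pretrained" sentence embeddings. In a real system these
# would come from a pretrained language model; the numbers here are made up.
PRETRAINED = {
    "refund my order": [0.9, 0.1, 0.0],
    "where is my package": [0.8, 0.2, 0.1],
    "love this product": [0.1, 0.9, 0.3],
    "works perfectly": [0.0, 0.8, 0.4],
}

# Small labeled, task-specific dataset: 1 = support request, 0 = praise.
TRAINING = [("refund my order", 1), ("where is my package", 1),
            ("love this product", 0), ("works perfectly", 0)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_head(examples, epochs: int = 500, lr: float = 0.5):
    """Train only a new logistic-regression head; the embeddings are never updated."""
    dim = len(PRETRAINED[examples[0][0]])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        for text, y in examples:
            x = PRETRAINED[text]  # frozen features
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of the log-loss with respect to the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def predict(w, b, text: str) -> int:
    x = PRETRAINED[text]
    return int(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) > 0.5)


w, b = train_head(TRAINING)
print([predict(w, b, text) for text, _ in TRAINING])
```

Only four numbers (`w` and `b`) are learned here, which is why adapting a pretrained representation needs far less labeled data and compute than building a model from scratch.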

Resource Intensiveness

Traditional NLP models are relatively resource-efficient. They don’t require massive computational power and can be run on less advanced hardware.

LLMs, however, require a significant amount of computational resources to train and deploy. They rely on high-powered GPUs and cloud infrastructure, as their vast datasets and complex models demand substantial processing power, storage, and memory.

Scalability

NLP models can scale to handle a variety of tasks, but often need separate models for each type of language processing.

LLMs are more scalable, as they are designed to perform multiple tasks from a single model. This makes LLMs far more efficient when applied across different industries, handling everything from text generation to summarization, without the need to build a new model for each specific task.

Integration with Other Tools

NLP models are commonly integrated with specific applications like chatbots, translation tools, or sentiment analysis systems, often requiring additional coding or customization.

LLMs are inherently more flexible and can be easily integrated into a variety of platforms and tools. Their adaptability makes them well-suited for integration with applications that require complex, dynamic responses, such as virtual assistants or customer support systems.

Error Propagation

In NLP models, errors are typically confined to the task at hand—misclassifying a piece of text or failing in entity recognition, for example.

In LLMs, however, errors can propagate across tasks due to their more generalized nature. If an LLM makes a mistake in one area (e.g., text generation), it can lead to larger, more complex errors in subsequent interactions or tasks, potentially making the AI less reliable without proper feedback loops.

Benefits of Combining NLP and LLM

Integrating NLP and LLM technologies opens up many possibilities by leveraging their complementary strengths to deliver more accurate and efficient results. This combination allows developers to create flexible, scalable systems that excel in both structured tasks and dynamic interactions. Here are some benefits of combining them:

- Smarter Data Processing: NLP techniques like tokenization and stop-word removal refine raw data before feeding it into LLMs, improving input quality.

- Balanced Workload: NLP efficiently handles routine tasks like sentiment detection, while LLMs generate rich, context-aware responses.

- Greater Accuracy: NLP’s rule-based methods enhance precision, while LLMs help reduce errors in complex or ambiguous situations.

- Optimized Performance: Lightweight NLP techniques complement resource-heavy LLMs, ensuring efficient use of computing power.

- Engaging Interactions: NLP’s ability to recognize intent, combined with LLMs’ natural conversation skills, leads to more interactive chatbots and virtual assistants.
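As a sketch of lightweight NLP preprocessing in front of an LLM, the code below strips HTML, normalizes whitespace, and extracts structured fields with regular expressions before assembling a prompt. The order-ID format (`AB-123456`), the field names, and the prompt layout are assumptions made for illustration, and the email pattern is deliberately loose rather than standards-complete.

```python
import html
import re

ORDER_ID = re.compile(r"\b[A-Z]{2}-\d{6}\b")  # assumed order-number format
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b")  # loose email pattern
TAG = re.compile(r"<[^>]+>")


def preprocess(raw: str) -> dict:
    """Clean raw text and extract structured fields before calling an LLM."""
    text = html.unescape(TAG.sub(" ", raw))  # drop HTML tags, decode entities
    text = re.sub(r"\s+", " ", text).strip()  # collapse all whitespace
    return {
        "text": text,
        "order_ids": ORDER_ID.findall(text),
        "emails": EMAIL.findall(text),
    }


def build_llm_prompt(fields: dict) -> str:
    """Hypothetical prompt layout that passes the extracted fields explicitly."""
    return (
        f"Known order IDs: {', '.join(fields['order_ids']) or 'none'}\n"
        f"Customer email: {', '.join(fields['emails']) or 'none'}\n"
        f"Message: {fields['text']}\n"
        "Write a short, polite reply."
    )


raw = "<p>Hi,&nbsp;my order <b>AB-123456</b> hasn't arrived.</p>\n<p>Reach me at jo@example.com</p>"
fields = preprocess(raw)
print(build_llm_prompt(fields))
```

The regex steps are cheap and deterministic, so the LLM receives clean text plus explicitly extracted fields instead of having to find them in raw markup.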

By merging NLP and LLM capabilities, businesses can strike the perfect balance between efficiency and creativity, making them more effective across both task-specific and broader applications.

NLP or LLM: Deciding Which One to Use

Deciding between NLP and LLM depends on the complexity of the task, resource availability, and the level of contextual understanding required. NLP is best suited for rule-based language processing, such as text classification, sentiment analysis, named entity recognition, and keyword extraction. It works efficiently in structured environments where predefined rules or training data guide the model’s behavior.

On the other hand, LLMs excel at generating human-like responses, understanding context deeply, and handling diverse queries without task-specific training. They are ideal for applications like conversational AI, long-form content generation, and advanced chatbots. If the goal is to process structured data efficiently, NLP is the right choice.

However, if the application demands deep contextual understanding and flexibility, LLMs provide better results. In many cases, combining both offers the most optimized solution, where NLP handles preprocessing and rule-based tasks while LLMs enhance the interaction with dynamic and adaptive responses.
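The hybrid approach can be sketched as a simple router: cheap rule-based intent detection answers routine, predictable requests, and everything else goes to an LLM. The intent patterns, the canned replies, and `fake_llm` are hypothetical placeholders; `fake_llm` stands in for whatever model call a real system would make, and no specific LLM API is implied.

```python
import re
from typing import Callable, Optional

# Rule-based intents for routine requests (patterns are illustrative assumptions).
INTENT_RULES = {
    "order_status": re.compile(r"\b(where is|track|status of)\b.*\border\b", re.IGNORECASE),
    "opening_hours": re.compile(r"\b(opening|business) hours\b|\bwhen are you open\b", re.IGNORECASE),
}

# Placeholder canned answers for the rule-based path.
CANNED_REPLIES = {
    "order_status": "You can track your order from the Orders page.",
    "opening_hours": "Our opening hours are listed on the Contact page.",
}


def detect_intent(message: str) -> Optional[str]:
    """Return the first matching intent, or None if no rule applies."""
    for intent, pattern in INTENT_RULES.items():
        if pattern.search(message):
            return intent
    return None


def route(message: str, call_llm: Callable[[str], str]) -> tuple[str, str]:
    """Answer well-defined requests with rules; send everything else to an LLM."""
    intent = detect_intent(message)
    if intent is not None:
        return "rules", CANNED_REPLIES[intent]
    return "llm", call_llm(message)


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call.
    return f"[LLM reply to: {prompt}]"


for msg in ["Where is my order #4521?", "Which plan suits a family of four?"]:
    source, reply = route(msg, fake_llm)
    print(source, "->", reply)
```

Routine requests never reach the model, which saves compute, while open-ended questions still get a flexible, context-aware answer.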

Future Scope of LLM and NLP

Humanized AI Assistants

The future of LLMs and NLP in humanized AI assistants looks promising, as they enable more natural and dynamic conversations. These assistants will not only understand context and nuances but also respond in a human-like manner.

With advancements in NLP and LLMs, AI assistants will become more intuitive, empathetic, and able to handle a wider range of tasks, making them more integrated into our daily lives and businesses.

Improved Robotics Language

NLP and LLMs will play a key role in improving robotics communication by allowing robots to understand and process natural human language more effectively.

This will enhance the ability of robots to interact with people in more complex, intuitive ways, whether it’s in customer service, healthcare, or industrial settings. Expect more sophisticated, context-aware robotic systems that can adapt and respond to dynamic human language with greater accuracy.

Automated Content Generation

The future of content creation will be heavily influenced by LLMs, capable of generating high-quality, engaging content with minimal human input. From blog posts to social media updates, LLMs will streamline content workflows, ensuring speed and consistency.

As these models improve, they will handle not just simple copywriting but also more complex tasks like creative writing and personalized marketing messages, making content generation more efficient and scalable.

The Bottom Line

NLP and LLMs are groundbreaking technologies, each with its own strengths. NLP excels in task-specific, rule-based applications, while LLMs push the limits with massive datasets and a deep understanding of context. When combined, they create a powerful synergy that enables more intelligent and adaptable systems, bridging the gap between human language and AI.

By working with top development companies like BigDataCentric, you can leverage these technologies to unlock new opportunities, drive innovation, and improve user experiences across various industries.

FAQs


Is it possible to fine-tune NLP and LLM for specialized tasks?

Yes, both can be fine-tuned. Traditional NLP models are adapted by updating their rules or retraining them on labeled, domain-specific data, while LLMs are fine-tuned with domain-specific data for improved accuracy in specialized fields like healthcare or finance.


Which approach is better for smaller projects?

Traditional NLP models are better as they require fewer resources and are cost-effective for tasks like sentiment analysis and text classification. LLMs are powerful but may be overkill for small-scale applications.


Can LLMs and NLP be used together?

Yes, LLMs and NLP can be used together to create more efficient and scalable AI-driven solutions. For example, an LLM can be integrated with traditional NLP pipelines to enhance text understanding, summarization, or question-answering while using NLP techniques to preprocess data, extract key information, and optimize model outputs. This hybrid approach balances efficiency, cost, and accuracy.


Are there any ethical concerns with using LLMs in NLP applications?

Yes, there are concerns about biases in LLMs, as they are trained on vast datasets that may contain biased or inappropriate content. Ensuring fairness, transparency, and accountability in the use of LLMs is a critical issue in NLP applications.

About Author

Jayanti Katariya is the CEO of BigDataCentric, a leading provider of AI, machine learning, data science, and business intelligence solutions. With 18+ years of industry experience, he has been at the forefront of helping businesses unlock growth through data-driven insights. Passionate about developing creative technology solutions from a young age, he pursued an engineering degree to further this interest. Under his leadership, BigDataCentric delivers tailored AI and analytics solutions to optimize business processes. His expertise drives innovation in data science, enabling organizations to make smarter, data-backed decisions.
